Artificial intelligence is booming right now. Individuals, freelancers, small businesses, and large corporations are all using AI to automate processes and everyday tasks. The goal is to work smarter, not harder. ChatGPT brought generative AI into the mainstream, but one tool that has recently caught a lot of attention is DeepSeek. In this blog, we will explain how the different DeepSeek models work and how they differ.

DeepSeek was created by Hangzhou DeepSeek Artificial Intelligence Co., Ltd., a Chinese AI company. Liang Wenfeng founded DeepSeek in July 2023, and the project is funded by the hedge fund High-Flyer. DeepSeek’s models are known for being much cheaper to use than OpenAI’s ChatGPT.

Let’s dive into the blog.

4 DeepSeek Models

DeepSeek has 4 major models:

- DeepSeek V3

- DeepSeek R1

- DeepSeek VL

- DeepSeek LLM

Apart from these main 4, there are 4 other models that are extended or older versions of them: DeepSeek Coder V2, DeepSeek V2, DeepSeek Coder, and DeepSeek Math.

DeepSeek-V3

I call it “The Smart Helper”: it’s like a super-knowledgeable friend who can chat, answer questions, and help with writing, homework, or even creative stories.

DeepSeek-V3 is one of the most advanced AI models available today. It is designed to be a knowledgeable, versatile, and user-friendly assistant. Whether you’re a student, writer, researcher, or just curious, DeepSeek-V3 can help with explanations, creative writing, coding, and more.

Best for:

- Everyday questions (like “How does photosynthesis work?”)

- Writing essays, emails, or blog posts (like this one!)

- Explaining things in simple terms

Release Date

- Release Date: December 26, 2024.

- Purpose: To provide a powerful yet easy-to-use AI for general knowledge, writing, coding, and problem-solving.

- DeepSeek AI focuses on making cutting-edge AI accessible without requiring technical expertise.

How Was DeepSeek-V3 Built?

DeepSeek-V3 is a Large Language Model (LLM), meaning it was trained on a massive amount of text data (books, articles, websites, etc.) to understand and generate human-like responses.
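To “generate” a response, an LLM repeatedly predicts the next token and appends it to the text so far. The toy sketch below shows that loop with greedy decoding. The tiny lookup table stands in for the neural network and is entirely hypothetical; a real model scores every token in its vocabulary using the whole conversation, not just the previous word.

```python
# Hypothetical toy "model": likely next tokens given only the previous token.
NEXT_TOKEN_SCORES = {
    "<s>": {"plants": 0.9, "the": 0.1},
    "plants": {"make": 0.8, "grow": 0.2},
    "make": {"food": 0.7, "oxygen": 0.3},
    "food": {"</s>": 1.0},
}


def generate(model: dict[str, dict[str, float]], start: str = "<s>", max_tokens: int = 10) -> list[str]:
    """Greedy autoregressive decoding: always append the highest-scoring next token."""
    tokens = [start]
    for _ in range(max_tokens):
        candidates = model.get(tokens[-1])
        if not candidates:
            break  # the toy model has nothing to say after this token
        next_token = max(candidates, key=candidates.get)
        if next_token == "</s>":
            break  # end-of-sequence token: the answer is complete
        tokens.append(next_token)
    return tokens[1:]  # drop the start marker


print(" ".join(generate(NEXT_TOKEN_SCORES)))  # → plants make food
```

Real chat models usually sample from these scores (controlled by settings such as temperature) instead of always taking the top choice, which is why the same question can get different answers.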

Key Technical Details:

- Model Size: 671 billion parameters in total (the “brain cells” of the AI), of which about 37 billion are activated for each token

- Training Data: About 14.8 trillion tokens of diverse, high-quality text

- Architecture: A Mixture-of-Experts (MoE) Transformer that uses Multi-head Latent Attention (MLA)

- Features:

  - 128K context window (can remember long conversations).

  - Supports file uploads (PDFs, Word, Excel, etc.) in the DeepSeek chat app.

  - Web search capability in the chat app (for up-to-date answers).
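DeepSeek-V3 gets its efficiency from a Mixture-of-Experts (MoE) design: according to its technical report it has 671B parameters in total, but a router sends each token to only a few “expert” sub-networks, so roughly 37B parameters are used per token. The sketch below is a simplified toy version of top-k routing. Following the idea described in the V3 report, it scores experts with a sigmoid and normalizes the scores of the chosen experts; it leaves out V3’s shared expert and load-balancing mechanism, and the expert functions and numbers are made up for illustration.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def route(router_logits: list[float], k: int) -> list[tuple[int, float]]:
    """Pick the k experts with the highest sigmoid scores and normalize their scores to sum to 1."""
    scores = [sigmoid(z) for z in router_logits]
    chosen = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    total = sum(scores[i] for i in chosen)
    return [(i, scores[i] / total) for i in chosen]


def moe_forward(x: list[float], experts, router_logits: list[float], k: int) -> list[float]:
    """Weighted sum of the chosen experts' outputs; the other experts are never run."""
    out = [0.0] * len(x)
    for idx, gate in route(router_logits, k):
        y = experts[idx](x)
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out


# Hypothetical toy setup: 8 experts, each simply scales its input by a different factor.
experts = [lambda v, s=s: [s * vi for vi in v] for s in range(1, 9)]
logits = [0.1, 2.0, -1.0, 0.5, 3.0, -0.5, 0.0, 1.0]  # made-up router outputs for one token

print(route(logits, k=2))  # experts 4 and 1 win, with gates that sum to 1
print(moe_forward([1.0, 2.0], experts, logits, k=2))

# Rough share of DeepSeek-V3's parameters used per token (37B active of 671B total).
print(f"{37 / 671:.1%}")  # → 5.5%
```

Because only k experts run for each token, the compute per token roughly tracks the active parameters rather than the total, which is how a very large MoE model can stay relatively cheap to run.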

What Makes DeepSeek-V3 Special?

| Feature | Why It Matters |
|---|---|
| Strong Reasoning | Solves complex logic & math problems better than many AIs. |
| Long Memory (128K tokens) | Can read & analyze entire books or long documents. |
| Free to Use | The DeepSeek chat app is free, unlike paid plans such as ChatGPT Plus (GPT-4); API use is billed per token. |
| Supports File Uploads | Extract text from PDFs, analyze data, summarize reports. |
| Web Search (when enabled) | Gets the latest info instead of relying only on its training data. |

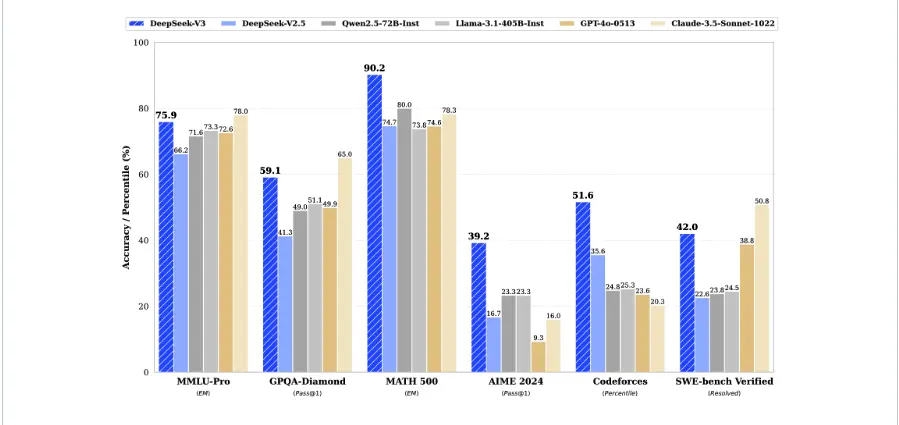

DeepSeek-V3 Compared to Other AI Models

| Model | Best For | Context Memory | Free/Paid | Web Search | File Support |
|---|---|---|---|---|---|
| DeepSeek-V3 | General use, research, coding | 128K | Free | Yes (manual toggle) | Yes |
| ChatGPT-4 | Creative writing, general chat | 32K | Paid | Yes (paid plans) | Yes |
| Claude 3 | Long documents, analysis | 200K | Paid | No | Yes |
| Gemini 1.5 | Multimodal (text + images) | 1M | Paid | Yes | Yes |

DeepSeek-V3 stands out because:

- It’s free (unlike GPT-4 & Claude 3).

- Longer memory than ChatGPT-4.

- File support & optional web search.

What Can You Do With DeepSeek-V3?

Here are some real-life uses:

Learning & Education

- Explain complex topics simply (“How does the heart work?”).

- Solve math problems step-by-step.

- Summarize long articles or textbooks.

Writing & Content Creation

- Write blog posts, essays, or stories.

- Improve grammar & rewrite sentences.

- Generate ideas for projects.

Coding & Tech Help

- Explain programming concepts.

- Debug or write simple code.

- Teach the basics of Python, HTML, etc.

File Analysis

- Extract text from PDFs, Word, and Excel.

- Summarize research papers.

- Analyze data trends in spreadsheets.

DeepSeek-R1

DeepSeek-R1 is a reasoning model designed for advanced research, technical analysis, and deep knowledge tasks. Before it answers, R1 works through the problem step by step (a “chain of thought”), which makes it strong at math, science, coding, and other complex problems. That makes it a good fit for scientists, engineers, and professionals who need careful, detailed answers.

Let’s break down its creation, technical specs, and unique capabilities in an easy-to-understand way.

Release Date

- Release Date: January 20, 2025

- Purpose: To serve as a high-performance research assistant for tackling scientific, legal, and technical challenges.

- DeepSeek-R1 bridges the gap between general AI chatbots and expert-level knowledge systems.

The Science Behind DeepSeek-R1

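DeepSeek-R1 is a Large Language Model (LLM) built on DeepSeek-V3-Base and then trained with large-scale reinforcement learning to reason step by step. It is tuned for precision and depth rather than casual conversation.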

Key Technical Details:

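- Model Size: 671 billion total parameters with about 37 billion active per token, the same as DeepSeek-V3. Smaller “distilled” versions (1.5B to 70B parameters, built on Qwen and Llama models) are also available.

- Training: Reinforcement learning on tasks with automatically checkable answers (math, coding, logic), combined with supervised fine-tuning on curated reasoning examples.

- Architecture: The same Mixture-of-Experts Transformer as DeepSeek-V3; its extra reasoning skill comes from training, not from a different network design.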

- Special Features:

- Stronger reasoning for math, physics, and engineering problems.

- Better at handling long, complex documents (research papers, patents, legal cases).

- Less “creative guessing”: aims for higher factual correctness.
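The DeepSeek-R1 paper describes rewarding the model with simple, rule-based checks (is the final answer correct, and is the reasoning wrapped in the expected `<think>` tags?) and optimizing it with Group Relative Policy Optimization (GRPO), which compares several sampled answers to the same question against each other. The sketch below shows only those two ideas. The exact tag template, the reward values, and the sample answers are assumptions for illustration, and the actual policy update (with clipping and a KL penalty) is omitted.

```python
import re
import statistics

# Assumed output template: reasoning inside <think>...</think>, final answer inside <answer>...</answer>.
THINK_ANSWER = re.compile(r"^<think>.*?</think>\s*<answer>(.*?)</answer>\s*$", re.DOTALL)


def rule_based_reward(completion: str, reference: str) -> float:
    """Format reward (1.0) for following the template, plus accuracy reward (1.0) for a correct answer."""
    match = THINK_ANSWER.match(completion)
    if match is None:
        return 0.0  # wrong format: no reward at all
    format_reward = 1.0
    accuracy_reward = 1.0 if match.group(1).strip() == reference else 0.0
    return format_reward + accuracy_reward


def group_relative_advantages(rewards: list[float]) -> list[float]:
    """GRPO-style advantage: how far each sample's reward is above or below its group's mean."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    if std == 0:
        return [0.0 for _ in rewards]  # all samples equally good: nothing to prefer
    return [(r - mean) / std for r in rewards]


# Hypothetical group of sampled answers to "What is 12 * 7?" (reference answer: 84).
samples = [
    "<think>12 * 7 = 84</think> <answer>84</answer>",
    "<think>12 * 7 = 74</think> <answer>74</answer>",
    "The answer is 84",
    "<think>10 * 7 + 2 * 7 = 84</think><answer>84</answer>",
]
rewards = [rule_based_reward(s, "84") for s in samples]
print(rewards)  # → [2.0, 1.0, 0.0, 2.0]
print(group_relative_advantages(rewards))
```

Answers that beat their group’s average get a positive advantage and are reinforced, while weaker ones are pushed down. Because the baseline comes from the group itself, this approach does not need a separate value (critic) model.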

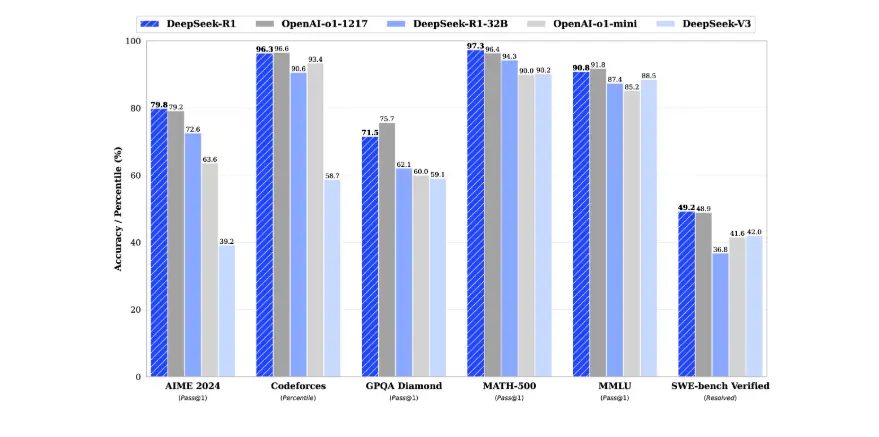

What Makes DeepSeek-R1 Special

| Feature | Why It Matters |
|---|---|
| Research-Optimized | Trained with reinforcement learning to work through math, science, and coding problems step by step. |
| High Precision | Aims to minimize errors in scientific, legal, or engineering explanations. |
| Deep Analysis | Can break down complex theories into structured explanations. |
| Long-Context Processing | Reads & understands entire research papers in one go. |
| Supports Citations | Can provide references or sources for key facts when web search is enabled. |

DeepSeek-R1 Compared to Other AI Models

| Model | Best For | Research Depth | Coding Skills | Free/Paid | Long-Context Support |
|---|---|---|---|---|---|
| DeepSeek-R1 | Research, document analysis, reasoning | ⭐⭐⭐⭐⭐ | ⭐⭐⭐ | Free | 128K |
| DeepSeek-V3 | General use, research, coding | ⭐⭐⭐ | ⭐⭐⭐⭐ | Free | 128K |
| ChatGPT-4 | Creative writing, general chat | ⭐⭐⭐⭐ | ⭐⭐⭐⭐ | Paid | 32K max |
| Claude 3 Opus | Long documents, analysis | ⭐⭐⭐⭐ | ⭐⭐ | Paid | 200K |

DeepSeek-R1 stands out because:

- It’s free (unlike ChatGPT-4 & Claude 3 Opus).

- More accurate for research than general-purpose models.

- Handles long technical documents better than most AIs.

What Can You Do With DeepSeek-R1?

Scientific & Technical Research

- Summarize complex research papers in simple terms.

- Explain advanced physics/math concepts (e.g., quantum mechanics).

- Help with engineering or medical research.

Legal & Compliance

- Analyze legal contracts or patent filings.

- Compare different laws or regulations across countries.

- Simplify legal jargon into plain language.

Data & Technical Analysis

- Interpret statistical research findings.

- Assist in scientific coding (Python, MATLAB, R).

- Explain machine learning or AI research papers.

Academic Writing

- Help structure research proposals or theses.

- Suggest credible sources for citations (always verify them before citing).

- Proofread technical manuscripts.

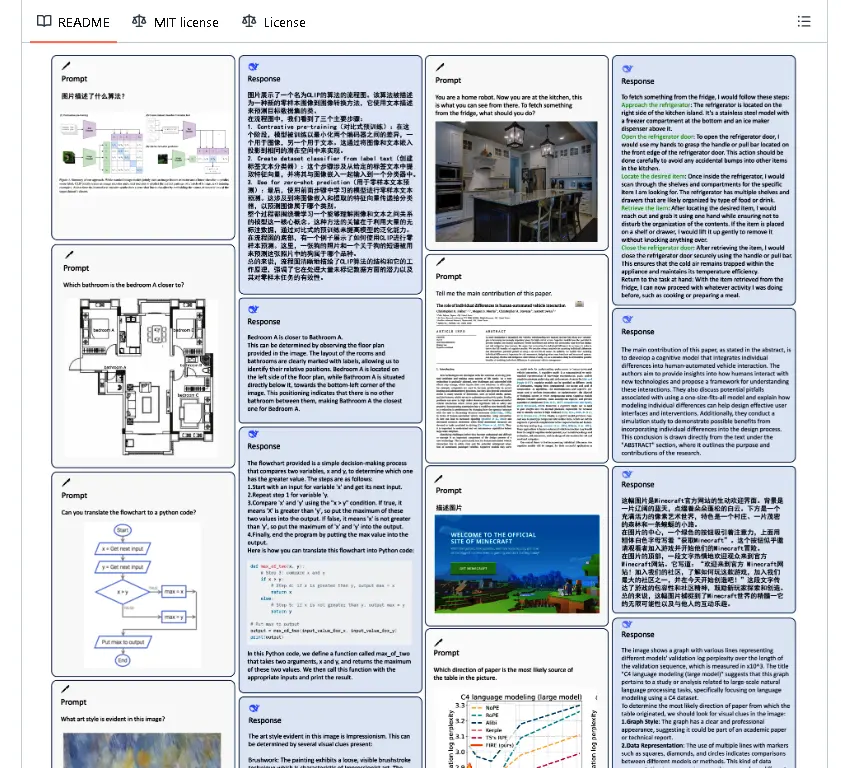

DeepSeek-VL

DeepSeek-VL represents a groundbreaking advancement in multimodal AI, combining visual understanding with natural language processing. Unlike text-only models, VL can analyze images, charts, and diagrams while maintaining strong conversational abilities. This makes it invaluable for education, design, accessibility, and technical fields requiring visual comprehension.

Core Specifications & Release Date

- Release Date: March 2024

- Model Type: Vision-Language Foundation Model

- Architecture: Hybrid transformer (ViT + LLM)

- Training Data:

- 500M+ image-text pairs

- Scientific diagrams, web images, and document scans

- Synthetic data for robustness

Technical Architecture

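DeepSeek-VL combines three components:

- Hybrid Vision Encoder – Processes pixel data into embeddings; in the 7B model it uses SigLIP for a 384×384 view of the image and SAM-B for a high-resolution 1024×1024 view

- Vision-Language Adaptor – A small MLP that projects the visual embeddings into the language model’s input space

- Language Model – Interprets the visual tokens together with the text prompt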
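A toy sketch of how image features can reach the language model, assuming the simple MLP-adaptor design described in the DeepSeek-VL paper: the image is cut into patches, an encoder turns each patch into a vector, a small MLP projects those vectors to the language model’s embedding size, and the resulting “visual tokens” are placed in front of the text tokens. All sizes and weights are hypothetical, the ReLU activation and the 16-pixel patch size for the 1024px view are assumptions, and the real encoders also downsample their features, so these patch counts are not the number of tokens the LLM actually sees.

```python
import random

random.seed(0)  # reproducible toy weights
VISION_DIM, HIDDEN_DIM, LLM_DIM = 4, 6, 3  # hypothetical sizes, far smaller than the real model


def num_patches(image_side: int, patch_size: int) -> int:
    """Number of square patches a ViT-style encoder cuts a square image into."""
    return (image_side // patch_size) ** 2


def linear(vec: list[float], weights: list[list[float]]) -> list[float]:
    """Matrix-vector product, with the matrix given as a list of rows."""
    return [sum(w * v for w, v in zip(row, vec)) for row in weights]


def adaptor(patch_embeddings, w1, w2):
    """Two-layer MLP (with ReLU) mapping vision features to the language model's embedding size."""
    return [linear([max(0.0, h) for h in linear(p, w1)], w2) for p in patch_embeddings]


def build_llm_input(visual_tokens, text_tokens):
    """The language model reads the projected image tokens first, then the text prompt."""
    return visual_tokens + text_tokens


def rand_matrix(rows: int, cols: int) -> list[list[float]]:
    return [[random.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


w1 = rand_matrix(HIDDEN_DIM, VISION_DIM)
w2 = rand_matrix(LLM_DIM, HIDDEN_DIM)
patches = [[random.random() for _ in range(VISION_DIM)] for _ in range(4)]  # stand-in encoder output
text = [[0.0] * LLM_DIM for _ in range(2)]  # stand-in embeddings for a 2-token prompt

sequence = build_llm_input(adaptor(patches, w1, w2), text)
print(len(sequence), len(sequence[0]))  # → 6 3

# Patch counts: 1024px image with assumed 16px patches vs. a 336px image with 14px patches
# (the CLIP ViT-L/14 setting used by LLaVA-1.5).
print(num_patches(1024, 16), num_patches(336, 14))  # → 4096 576
```

The patch counts show why higher input resolution matters for documents: a 1024px view carries many times more fine detail (small text, chart labels) than a 336px one.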

Key Parameters:

- Model sizes: 1.3B and 7B parameters

- Language model: DeepSeek LLM (1.3B or 7B variant)

- Training objective: Next-token prediction on image-text data

Unique Capabilities

| Feature | Advantage | Use Case Example |
|---|---|---|
| Document OCR+ | Reads text in context | Extracting data from invoices |
| Diagram Reasoning | Understands flowcharts | Explaining engineering schematics |
| Cultural Context | Recognizes memes/art | Analyzing historical paintings |
| Precision Captioning | Detailed descriptions | Alt-text generation for accessibility |

Performance Benchmarks

- TextVQA: 78.5% accuracy (vs. GPT-4V 72.3%)

- DocVQA: 83.2% (financial documents)

- COCO Captioning: CIDEr 128.7

- ScienceQA: 92.4% (diagram questions)
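Scores such as the TextVQA accuracy above are typically computed with the standard VQA accuracy metric: each question has ten human answers, and a prediction gets full credit if at least three annotators gave the same answer. The sketch below is the commonly used simplified form; the official evaluation script additionally normalizes answers (punctuation, articles, number words) and averages over subsets of annotators. The example questions and answers are hypothetical.

```python
def vqa_accuracy(prediction: str, human_answers: list[str]) -> float:
    """Simplified VQA accuracy: min(number of annotators who gave this answer / 3, 1)."""
    normalized = prediction.strip().lower()
    matches = sum(1 for answer in human_answers if answer.strip().lower() == normalized)
    return min(matches / 3, 1.0)


def dataset_accuracy(predictions: list[str], all_answers: list[list[str]]) -> float:
    """Average the per-question scores and report a percentage."""
    scores = [vqa_accuracy(p, a) for p, a in zip(predictions, all_answers)]
    return 100 * sum(scores) / len(scores)


# Hypothetical example: two questions, ten annotator answers each.
answers = [
    ["stop"] * 8 + ["halt"] * 2,       # 8 people agree: full credit for "stop"
    ["coca cola"] * 2 + ["coke"] * 8,  # only 2 people wrote "coca cola": 2/3 credit
]
print(dataset_accuracy(["Stop", "coca cola"], answers))
```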

Training Methodology

- Pretraining Phase:

- 10,000+ GPU hours on A100 clusters

- Curriculum learning (simple→complex images)

- Adversarial samples for robustness

- Alignment:

- RLHF with human visual feedback

- Bias mitigation through dataset balancing
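Curriculum learning simply means ordering training data from easy to hard. The section does not say how DeepSeek-VL scored images as “simple” or “complex”, so the `difficulty` field below is a hypothetical stand-in; the sketch only shows the general staging idea.

```python
from dataclasses import dataclass
from typing import Iterator


@dataclass
class Sample:
    image_id: str
    difficulty: float  # hypothetical score, e.g. how many objects or how much text the image contains


def curriculum_stages(samples: list[Sample], num_stages: int) -> Iterator[list[Sample]]:
    """Yield growing training pools: the easiest samples first, then progressively harder ones."""
    ordered = sorted(samples, key=lambda s: s.difficulty)
    for stage in range(1, num_stages + 1):
        cutoff = round(len(ordered) * stage / num_stages)
        yield ordered[:cutoff]


data = [Sample("chart", 0.9), Sample("cat", 0.1), Sample("receipt", 0.5), Sample("logo", 0.3)]
for i, pool in enumerate(curriculum_stages(data, num_stages=2), start=1):
    print(f"stage {i}:", [s.image_id for s in pool])
# stage 1: ['cat', 'logo']
# stage 2: ['cat', 'logo', 'receipt', 'chart']
```

Keeping the easy samples in later stages (rather than switching to hard samples only) is one common choice, since it helps the model avoid forgetting what it learned first.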

Real-World Applications

- Education:

- Solves math problems from photos

- Explains science diagrams step-by-step

- E-Commerce:

- Visual product search

- Automated catalog tagging

- Accessibility:

- Real-time scene description

- Document reading for visually impaired

- Research:

- Data extraction from research papers

- Chemical diagram interpretation

Comparative Advantage

| Model | Image Input | Text Understanding | Free/Paid | Max Resolution |
|---|---|---|---|---|
| DeepSeek-VL | ✔ | ⭐⭐⭐⭐⭐ | Free | 1024px |
| GPT-4V | ✔ | ⭐⭐⭐⭐ | Paid | 512px |
| LLaVA | ✔ | ⭐⭐⭐ | Free | 336px |
| Gemini 1.5 | ✔ | ⭐⭐⭐⭐ | Paid | 1536px |

DeepSeek-VL stands out because:

- High-resolution (1024×1024) input for document analysis

- Chinese/English bilingual visual understanding

- Open weights (1.3B and 7B versions), with commercial use permitted under DeepSeek’s model license

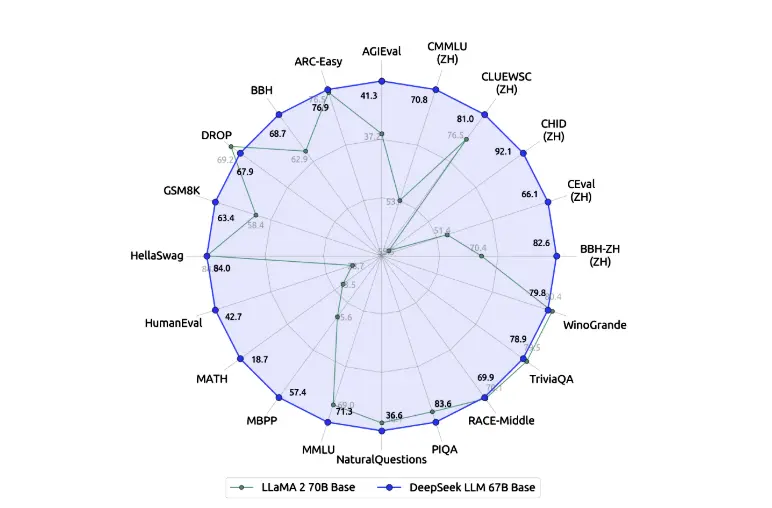

DeepSeek LLM

DeepSeek LLM is DeepSeek’s first-generation general-purpose model family. Later models such as V3 and R1 build on the lessons learned from it but use a newer Mixture-of-Experts architecture. This foundational model represents a significant leap in Chinese-English bilingual capabilities while delivering strong performance across diverse language tasks.

Development Timeline

- Initial Research: Q2 2023

- First Release: November 2023 (7B and 67B, base and chat versions)

- Successors: DeepSeek-V2 (May 2024), followed by DeepSeek-V3 (December 2024)

Model Specifications

| Parameter | Specification |
|---|---|
| Architecture | Dense Transformer (decoder-only) |
| Parameters | 67B (a 7B version is also available) |
| Context Window | 4,096 tokens |
| Training Tokens | 2T (Chinese and English) |
| Precision | bf16 |
| Attention | Grouped-Query Attention (67B model) |
| Positional Encoding | Rotary (RoPE) |
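Two rows of the table name specific techniques. Rotary position embedding (RoPE) encodes a token’s position by rotating pairs of dimensions in the query and key vectors, so the attention score between two tokens depends on how far apart they are rather than on their absolute positions. Grouped-query attention (GQA) lets several query heads share one key/value head, which shrinks the memory needed for the key/value cache. The sketch below shows both ideas in plain Python; the vectors and head counts are illustrative, not DeepSeek LLM’s real configuration, and the base of 10000 is the value from the original RoPE paper, assumed here.

```python
import math


def rope(vec: list[float], position: int, base: float = 10000.0) -> list[float]:
    """Rotary position embedding: rotate each (even, odd) pair of dimensions by position * frequency.

    Assumes len(vec) is even.
    """
    d = len(vec)
    out = list(vec)
    for i in range(0, d, 2):
        theta = position * base ** (-i / d)  # earlier dimension pairs rotate faster
        c, s = math.cos(theta), math.sin(theta)
        x, y = vec[i], vec[i + 1]
        out[i] = x * c - y * s
        out[i + 1] = x * s + y * c
    return out


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def kv_head_for(query_head: int, n_query_heads: int, n_kv_heads: int) -> int:
    """Grouped-query attention: each block of consecutive query heads shares one key/value head."""
    group_size = n_query_heads // n_kv_heads
    return query_head // group_size


q, k = [1.0, 0.5, -0.3, 2.0], [0.2, -1.0, 0.7, 0.4]
# Same distance (3 positions) at different absolute positions gives the same attention score.
print(dot(rope(q, 5), rope(k, 2)), dot(rope(q, 13), rope(k, 10)))

# Hypothetical sizes: 8 query heads sharing 2 key/value heads, so the KV cache is 4x smaller.
print([kv_head_for(h, 8, 2) for h in range(8)])  # → [0, 0, 0, 0, 1, 1, 1, 1]
```

Because rotations preserve vector length, RoPE changes only the angle between queries and keys, which is exactly what makes the score depend on relative position.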

Technical Innovations

1. Hybrid Training Approach

- Phase 1: Standard next-token prediction

- Phase 2: Task-specific instruction tuning

- Phase 3: Direct Preference Optimization (DPO) on human preference data
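Phase 1’s “next-token prediction” means the model is trained to assign high probability to the token that actually comes next. The usual objective is the average cross-entropy (negative log-probability of the true next token) over all positions; the sketch below computes it for a hypothetical two-step prediction.

```python
import math


def next_token_loss(predicted_probs: list[dict[str, float]], target_tokens: list[str]) -> float:
    """Average cross-entropy: -log of the probability the model gave to each true next token."""
    losses = [-math.log(probs[target]) for probs, target in zip(predicted_probs, target_tokens)]
    return sum(losses) / len(losses)


# Hypothetical predictions while reading "the cat sat": after "the", then after "the cat".
preds = [
    {"cat": 0.5, "dog": 0.5},
    {"sat": 0.25, "ran": 0.75},
]
targets = ["cat", "sat"]
print(round(next_token_loss(preds, targets), 4))  # → 1.0397
```

A perfect model that gives probability 1.0 to every true token would reach a loss of 0; training nudges the weights to push the loss toward that.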

2. Unique Features

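- Multi-Step Learning-Rate Schedule: Replaces the usual cosine schedule, which makes it easier to continue training from an intermediate checkpoint

- Bilingual Tokenizer: About 100K-token vocabulary (102,400 entries) covering both Chinese and English

- Related MoE Model: DeepSeekMoE 16B, released separately, activates only about 2.8B of its parameters per token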

Performance Metrics

| Benchmark | Score | Comparison |
|---|---|---|
| C-Eval | 85.7% | +12% vs LLaMA2-70B |
| MMLU | 75.2% | Comparable to GPT-3.5 |
| GSM8K | 82.5% | +8% vs Chinchilla |
| CLUE | 83.1% | SOTA Chinese |

Efficiency Metrics:

- 18.5 tokens/sec on A100 (16-bit)

- 65% reduced inference cost vs comparable models

Training Infrastructure

- Hardware: 1024× NVIDIA A100 (40GB)

- Training Time: 21 days continuous

- Energy Cost: ≈1.2M kWh (carbon offset)

- Dataset Composition:

- 45% Web documents

- 30% Academic papers

- 15% Code

- 10% Structured data
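The section lists the mix of data sources but not how the mix was applied during training. One common approach, assumed here, is to pick each training document’s source at random in proportion to the target weights, which the sketch below does with Python’s `random.choices`.

```python
import random
from collections import Counter

# Mixture weights from the dataset composition listed above.
MIXTURE = {"web": 0.45, "academic": 0.30, "code": 0.15, "structured": 0.10}


def sample_sources(n: int, mixture: dict[str, float], seed: int = 0) -> list[str]:
    """Choose a data source for each of n training documents, in proportion to the weights."""
    rng = random.Random(seed)  # fixed seed so the draw is reproducible
    names = list(mixture)
    return rng.choices(names, weights=[mixture[name] for name in names], k=n)


draws = sample_sources(10_000, MIXTURE)
counts = Counter(draws)
for name in MIXTURE:
    print(f"{name:>10}: {counts[name] / len(draws):.1%}")
```

With enough draws the observed shares land close to 45/30/15/10; sampling per document (instead of training on each source in one block) keeps every batch a blend of all four kinds of data.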

Language Capabilities

Bilingual Proficiency:

- Chinese: Handles classical/modern texts

- English: Native-level comprehension

- Code Switching: Seamless transitions

Specialized Skills:

- Legal document analysis

- Mathematical proof writing

- Technical documentation

Comparative Analysis

| Model | Params | Languages | Context | Open Weight |
|---|---|---|---|---|
| DeepSeek LLM | 67B | ZH/EN | 4K | Yes |
| LLaMA 3 | 70B | Multi | 8K | Yes |
| GPT-4 Base | Undisclosed | Multi | 32K | No |
| Mixtral 8x7B | 46.7B | EN/EU | 32K | Yes |

DeepSeek LLM stands out because:

- One of the largest open Chinese-English models at its release

- Cost-effective inference

- Enterprise-ready safety features

Why DeepSeek LLM Matters

This foundational model demonstrates that:

- Chinese-language AI can rival global counterparts

- Open-weight models can achieve commercial-grade performance

- Efficiency innovations reduce environmental impact

DeepSeek LLM represents a strategic milestone in sovereign AI development. It offers organizations a viable open-weight alternative to closed models such as GPT-4 while maintaining exceptional bilingual capabilities.